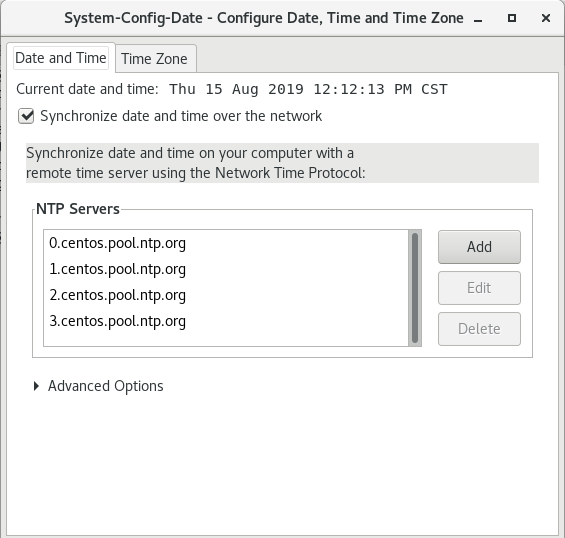

1. Prepare the environment: IP address, firewall, SELinux

2. Install Java
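The post does not list the exact checks for this step. A minimal read-only sketch follows, assuming a CentOS/RHEL-style host with `firewalld` and the SELinux tools installed (an assumption based on the prompts and `systemctl` output later in this post). The function name `preflight` is made up for illustration, and the script changes nothing on the host.

```shell
# Read-only pre-flight check: prints the current state, modifies nothing.
preflight() {
  echo "== IPv4 addresses =="
  if command -v ip >/dev/null 2>&1; then
    # -o prints one line per address; fields 2 and 4 are interface and CIDR
    ip -4 -o addr show 2>/dev/null | awk '{print $2, $4}' || true
  else
    echo "ip command not found"
  fi

  echo "== firewalld =="
  if command -v systemctl >/dev/null 2>&1; then
    # is-active exits non-zero when the unit is not running
    systemctl is-active firewalld 2>/dev/null || true
  else
    echo "systemctl not found"
  fi

  echo "== SELinux =="
  if command -v getenforce >/dev/null 2>&1; then
    # Prints Enforcing, Permissive or Disabled
    getenforce || true
  else
    echo "getenforce not found"
  fi
}

preflight
```

Whether to stop the firewall or open individual ports, and whether to relax SELinux, depends on your environment; the sketch only reports the current state.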

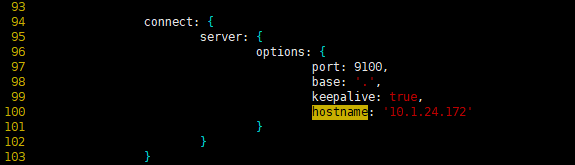

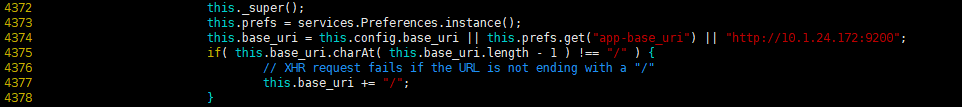

3. Configure Logstash

[root@n7 logstash]# ll

total 36

drwxrwxr-x 2 root root 6 Jul 24 16:00 conf.d

-rw-r--r-- 1 root root 1915 Jul 24 16:00 jvm.options

-rw-r--r-- 1 root root 4987 Jul 24 16:00 log4j2.properties

-rw-r--r-- 1 root root 342 Jul 24 16:00 logstash-sample.conf

-rw-r--r-- 1 root root 8236 Aug 21 23:36 logstash.yml

-rw-r--r-- 1 root root 285 Jul 24 16:00 pipelines.yml

-rw------- 1 root root 1696 Jul 24 16:00 startup.options

[root@n7 logstash]# cp logstash.yml logstash.yml.bak

[root@n7 logstash]# vim logstash.yml

[root@n7 logstash]# grep -n '^[a-zA-Z]' /etc/logstash/logstash.yml

19:node.name: n7

28:path.data: /var/lib/logstash

77:config.reload.automatic: true

81:config.reload.interval: 10s

190:http.host: "10.1.24.71"

208:path.logs: /var/log/logstash

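Explanation:

[root@xx ~]# grep -n '^[a-zA-Z]' /etc/logstash/logstash.yml

19:node.name: xx # node name, usually the host's domain name

28:path.data: /var/lib/logstash # persistent data directory used by Logstash and its plugins

77:config.reload.automatic: true # enable automatic reloading of configuration files

81:config.reload.interval: 10s # interval between configuration reload checks

190:http.host: "x.x.x.x" # address the HTTP API is reached on, usually the local IP or the host's domain name

208:path.logs: /var/log/logstash # log directory

Note: the files below must be owned by the logstash user.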
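Listing only the active settings can be made independent of the shell's locale by using the POSIX class `[[:alpha:]]` instead of a letter range. The sketch below wraps that in a helper; the name `active_settings` and the sample file content are made up for illustration.

```shell
# Print every line that starts with a letter, prefixed with its line number.
# Commented-out lines (starting with '#') and blank lines are skipped.
active_settings() {
  grep -nE '^[[:alpha:]]' "$1"
}

# Demo on a throwaway file; the content is illustrative only
demo="$(mktemp)"
printf '%s\n' '# node.name: test' 'node.name: n7' '' 'path.data: /var/lib/logstash' > "$demo"
active_settings "$demo"
# prints:
# 2:node.name: n7
# 4:path.data: /var/lib/logstash
rm -f "$demo"
```

On the host from this post the call would be `active_settings /etc/logstash/logstash.yml`.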

[root@n7 logstash]# ll /usr/share/logstash/

total 848

drwxr-xr-x 2 logstash logstash 4096 Aug 21 23:36 bin

-rw-r--r-- 1 logstash logstash 2276 Jul 24 16:00 CONTRIBUTORS

drwxrwxr-x 2 logstash logstash 6 Jul 24 16:00 data

-rw-r--r-- 1 logstash logstash 4144 Jul 24 16:00 Gemfile

-rw-r--r-- 1 logstash logstash 23109 Jul 24 16:00 Gemfile.lock

drwxr-xr-x 6 logstash logstash 84 Aug 21 23:36 lib

-rw-r--r-- 1 logstash logstash 13675 Jul 24 16:00 LICENSE.txt

drwxr-xr-x 4 logstash logstash 90 Aug 21 23:36 logstash-core

drwxr-xr-x 3 logstash logstash 86 Aug 21 23:36 logstash-core-plugin-api

drwxr-xr-x 4 logstash logstash 55 Aug 21 23:36 modules

-rw-r--r-- 1 logstash logstash 808305 Jul 24 16:00 NOTICE.TXT

drwxr-xr-x 3 logstash logstash 30 Aug 21 23:36 tools

drwxr-xr-x 4 logstash logstash 33 Aug 21 23:36 vendor

drwxr-xr-x 9 logstash logstash 193 Aug 21 23:36 x-pack
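Reading a long listing by eye is error-prone, so the ownership check can be scripted. The sketch below prints every path under a directory that is not owned by a given user; the helper name `not_owned_by` is made up for illustration. It assumes the `logstash` user exists, which the listing above suggests.

```shell
# Print every path under a directory that is NOT owned by the given user
# (user name or numeric UID). Empty output means ownership is consistent.
not_owned_by() {
  dir="$1"
  user="$2"
  find "$dir" ! -user "$user" -print
}

# Example with the path from this post:
#   not_owned_by /usr/share/logstash logstash
# If anything is printed, ownership can be corrected with:
#   chown -R logstash:logstash /usr/share/logstash
```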

4. Start Logstash

[root@n7 logstash]# systemctl restart logstash.service

[root@n7 logstash]# systemctl status logstash.service

● logstash.service - logstash

Loaded: loaded (/etc/systemd/system/logstash.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2019-08-22 00:33:30 EDT; 5s ago

Main PID: 8011 (java)

CGroup: /system.slice/logstash.service

└─8011 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.thres…

Aug 22 00:33:30 n7 systemd[1]: Started logstash.

Aug 22 00:33:30 n7 logstash[8011]: OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Startup takes a little while. At first the port does not show up as listening; wait a moment and it appears.

[root@n7 logstash]# ss -ntlp | grep 9600

[root@n7 logstash]# systemctl status logstash.service

● logstash.service - logstash

Loaded: loaded (/etc/systemd/system/logstash.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2019-08-22 00:33:30 EDT; 34s ago

Main PID: 8011 (java)

CGroup: /system.slice/logstash.service

└─8011 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.thres…

Aug 22 00:33:30 n7 systemd[1]: Started logstash.

Aug 22 00:33:30 n7 logstash[8011]: OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

[root@n7 logstash]# ss -ntlp | grep 9600

[root@n7 logstash]# ss -ntlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:* users:(("sshd",pid=6754,fd=3))

LISTEN 0 100 127.0.0.1:25 *:* users:(("master",pid=6905,fd=13))

LISTEN 0 128 :::22 :::* users:(("sshd",pid=6754,fd=4))

LISTEN 0 100 ::1:25 :::* users:(("master",pid=6905,fd=14))

LISTEN 0 50 ::ffff:10.1.24.71:9600 :::* users:(("java",pid=8011,fd=70))

The port is now listening.

[root@n7 logstash]# ss -ntlp | grep 9600

LISTEN 0 50 ::ffff:10.1.24.71:9600 :::* users:(("java",pid=8011,fd=70))
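Instead of re-running `ss` by hand until port 9600 appears, the wait can be scripted as a small retry loop. This is a sketch; the helper names `wait_until` and `port_9600_listening` are made up for illustration, and the attempt count and delay in the usage comment are arbitrary.

```shell
# Retry a command until it succeeds or the attempt budget runs out.
# Usage: wait_until <attempts> <delay_seconds> <command...>
wait_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Succeeds when some process is listening on TCP port 9600.
port_9600_listening() {
  ss -ntl 2>/dev/null | grep -q ':9600 '
}

# Example: poll every 2 seconds, give up after 30 attempts
#   wait_until 30 2 port_9600_listening && echo "Logstash API port is up"
```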

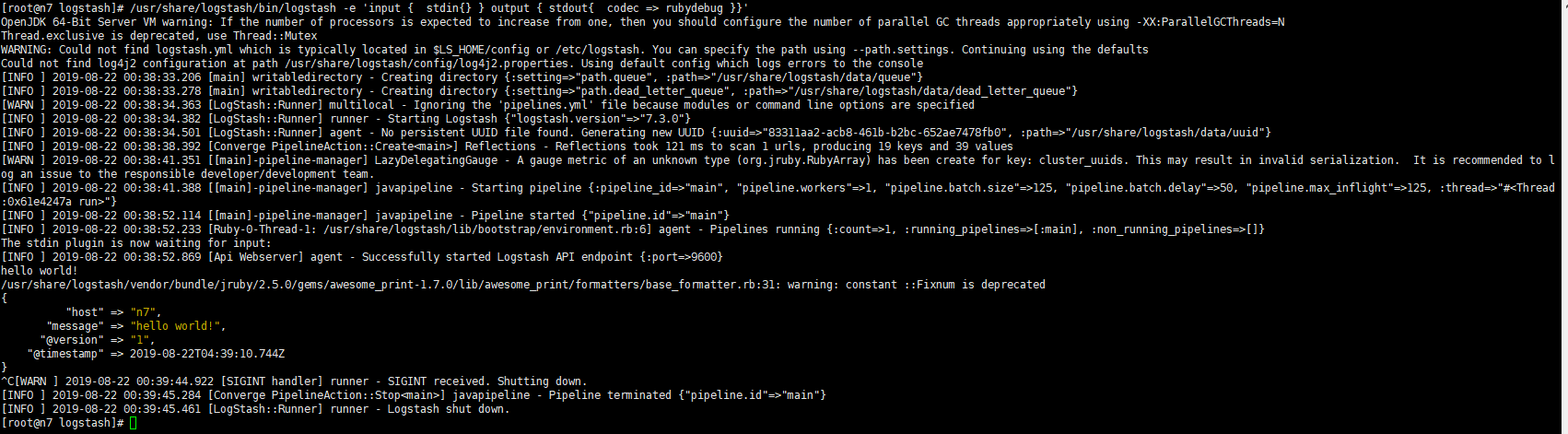

5. Test standard input and output

[root@n7 logstash]# /usr/share/logstash/bin/logstash -e 'input { stdin{} } output { stdout{ codec => rubydebug }}'

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Thread.exclusive is deprecated, use Thread::Mutex

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2019-08-22 00:38:33.206 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

[INFO ] 2019-08-22 00:38:33.278 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

[WARN ] 2019-08-22 00:38:34.363 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-08-22 00:38:34.382 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.3.0"}

[INFO ] 2019-08-22 00:38:34.501 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"83311aa2-acb8-461b-b2bc-652ae7478fb0", :path=>"/usr/share/logstash/data/uuid"}

[INFO ] 2019-08-22 00:38:38.392 [Converge PipelineAction::Create<main>] Reflections - Reflections took 121 ms to scan 1 urls, producing 19 keys and 39 values

[WARN ] 2019-08-22 00:38:41.351 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[INFO ] 2019-08-22 00:38:41.388 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>125, :thread=>"#<Thread:0x61e4247a run>"}

[INFO ] 2019-08-22 00:38:52.114 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

[INFO ] 2019-08-22 00:38:52.233 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

The stdin plugin is now waiting for input:

[INFO ] 2019-08-22 00:38:52.869 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

hello world!

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"host" => "n7",

"message" => "hello world!",

"@version" => "1",

"@timestamp" => 2019-08-22T04:39:10.744Z

}

^C[WARN ] 2019-08-22 00:39:44.922 [SIGINT handler] runner - SIGINT received. Shutting down.

[INFO ] 2019-08-22 00:39:45.284 [Converge PipelineAction::Stop<main>] javapipeline - Pipeline terminated {"pipeline.id"=>"main"}

[INFO ] 2019-08-22 00:39:45.461 [LogStash::Runner] runner - Logstash shut down.
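The `-e` one-liner is convenient for a quick test, but the same pipeline can also live in a file and be checked for syntax errors before it is run. The sketch below writes the stdin-to-stdout pipeline to a temporary file and validates it with `-f` and `--config.test_and_exit`. The temporary path is only for illustration, and the validation step runs only if Logstash is installed at the path used in this post.

```shell
# Write the stdin -> stdout test pipeline to a file (temporary path, for illustration).
conf="$(mktemp)"
cat > "$conf" <<'EOF'
input {
  stdin {}
}
output {
  stdout { codec => rubydebug }
}
EOF

# Validate the file only when Logstash exists at the path used in this post.
ls_bin=/usr/share/logstash/bin/logstash
if [ -x "$ls_bin" ]; then
  # --config.test_and_exit parses the config and exits without starting the pipeline
  "$ls_bin" -f "$conf" --config.test_and_exit
else
  echo "logstash not found at $ls_bin; pipeline written to $conf"
fi
```

Dropping `--config.test_and_exit` from the command starts the pipeline, which should behave like the `-e` invocation above.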

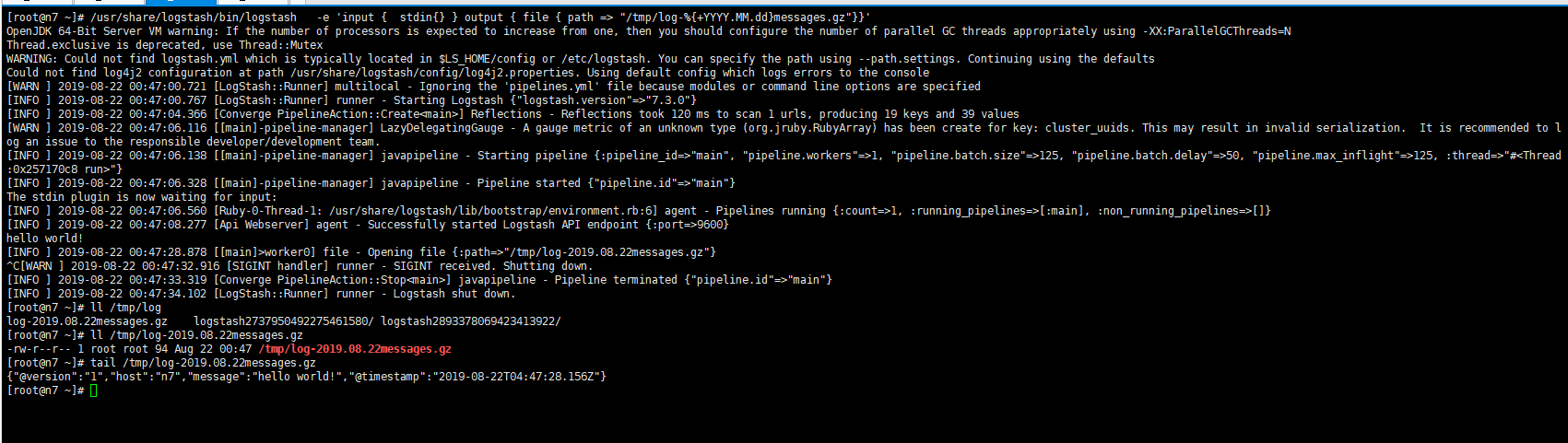

6. Test output to a file

/usr/share/logstash/bin/logstash -e 'input { stdin{} } output { file { path => "/tmp/log-%{+YYYY.MM.dd}messages.gz"}}'

[root@n7 ~]# /usr/share/logstash/bin/logstash -e 'input { stdin{} } output { file { path => "/tmp/log-%{+YYYY.MM.dd}messages.gz"}}'

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Thread.exclusive is deprecated, use Thread::Mutex

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-08-22 00:47:00.721 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-08-22 00:47:00.767 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.3.0"}

[INFO ] 2019-08-22 00:47:04.366 [Converge PipelineAction::Create<main>] Reflections - Reflections took 120 ms to scan 1 urls, producing 19 keys and 39 values

[WARN ] 2019-08-22 00:47:06.116 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[INFO ] 2019-08-22 00:47:06.138 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>125, :thread=>"#<Thread:0x257170c8 run>"}

[INFO ] 2019-08-22 00:47:06.328 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2019-08-22 00:47:06.560 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2019-08-22 00:47:08.277 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

hello world!

[INFO ] 2019-08-22 00:47:28.878 [[main]>worker0] file - Opening file {:path=>"/tmp/log-2019.08.22messages.gz"}

^C[WARN ] 2019-08-22 00:47:32.916 [SIGINT handler] runner - SIGINT received. Shutting down.

[INFO ] 2019-08-22 00:47:33.319 [Converge PipelineAction::Stop<main>] javapipeline - Pipeline terminated {"pipeline.id"=>"main"}

[INFO ] 2019-08-22 00:47:34.102 [LogStash::Runner] runner - Logstash shut down.

[root@n7 ~]# ll /tmp/log

log-2019.08.22messages.gz logstash2737950492275461580/ logstash2893378069423413922/

[root@n7 ~]# ll /tmp/log-2019.08.22messages.gz

-rw-r--r-- 1 root root 94 Aug 22 00:47 /tmp/log-2019.08.22messages.gz

[root@n7 ~]# tail /tmp/log-2019.08.22messages.gz

{"@version":"1","host":"n7","message":"hello world!","@timestamp":"2019-08-22T04:47:28.156Z"}
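The file name ends in `.gz`, yet `tail` prints readable JSON and the file is 94 bytes, which is the length of that one JSON line plus a newline. This suggests the file is plain text rather than compressed. The `file` output plugin has a `gzip` option, off by default, that would have to be set to `true` for real compression. A small sketch for checking whether a file is really gzip data, using the gzip magic bytes `1f 8b`, follows; the helper name `is_gzip` and the demo files are made up for illustration.

```shell
# Succeed if the file starts with the gzip magic bytes 0x1f 0x8b.
is_gzip() {
  [ "$(head -c 2 "$1" | od -An -tx1 | tr -d ' \n')" = "1f8b" ]
}

# Demo on throwaway files
plain="$(mktemp)"
echo '{"message":"hello world!"}' > "$plain"
gzip -c "$plain" > "$plain.gz"

if is_gzip "$plain"; then echo "plain file: gzip"; else echo "plain file: not gzip"; fi
if is_gzip "$plain.gz"; then echo "compressed file: gzip"; else echo "compressed file: not gzip"; fi

rm -f "$plain" "$plain.gz"
```

On the host from this post the check would be `is_gzip /tmp/log-2019.08.22messages.gz`.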

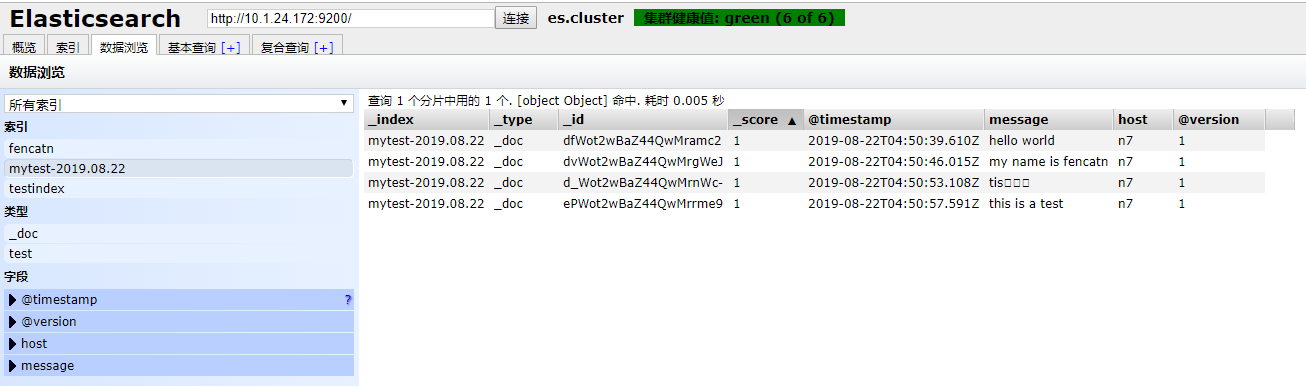

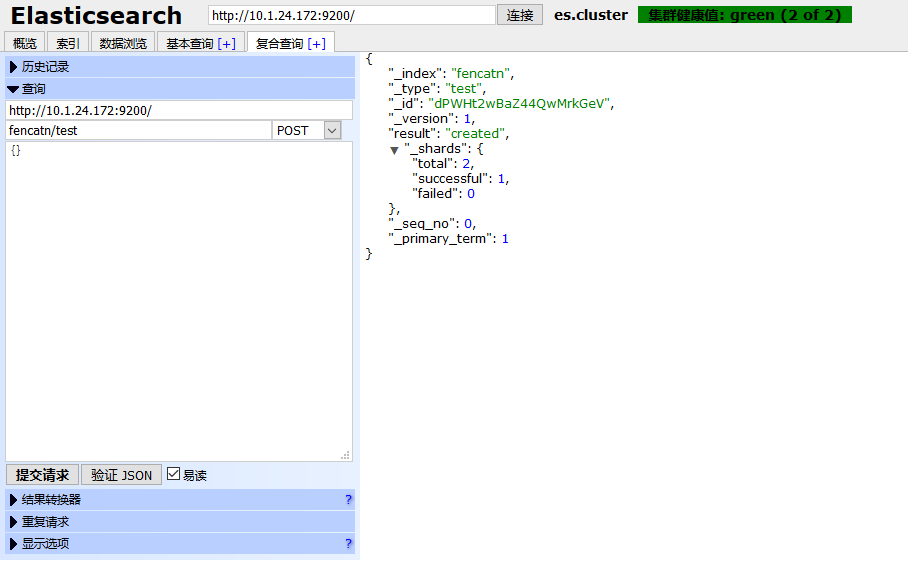

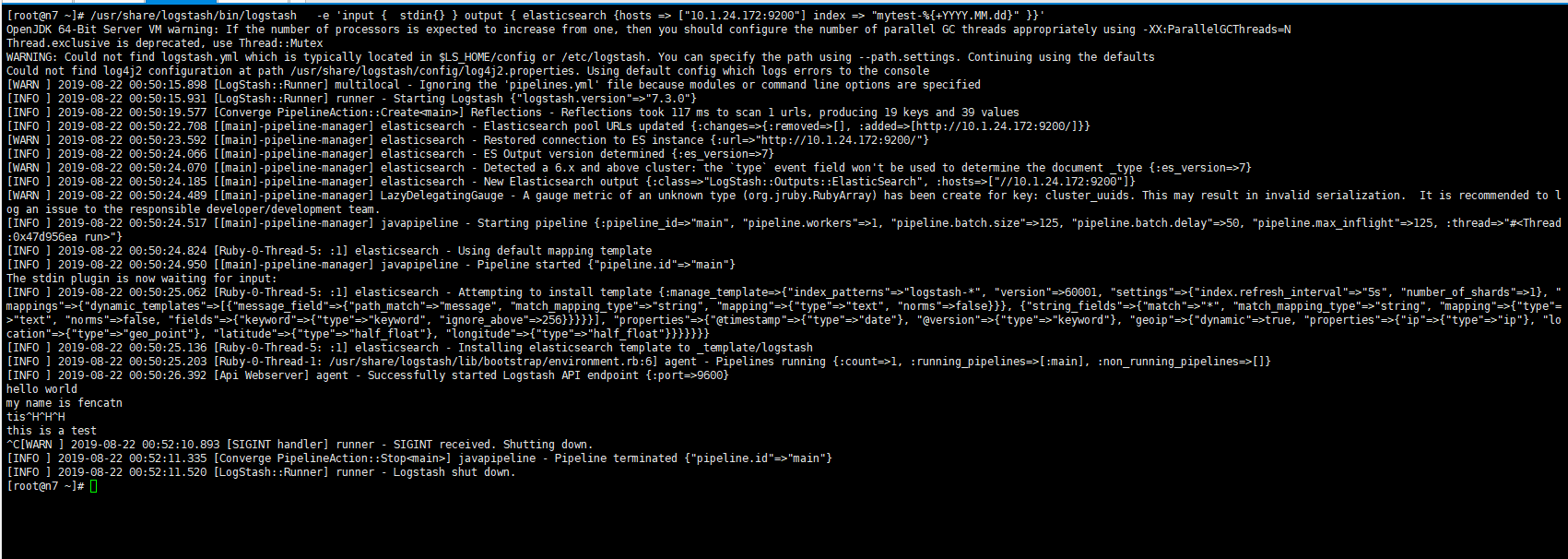

7. Test output to Elasticsearch

/usr/share/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch {hosts => ["10.1.24.172:9200"] index => "mytest-%{+YYYY.MM.dd}" }}'

[root@n7 ~]# /usr/share/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch {hosts => ["10.1.24.172:9200"] index => "mytest-%{+YYYY.MM.dd}" }}'

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Thread.exclusive is deprecated, use Thread::Mutex

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2019-08-22 00:50:15.898 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2019-08-22 00:50:15.931 [LogStash::Runner] runner - Starting Logstash {"logstash.version"=>"7.3.0"}

[INFO ] 2019-08-22 00:50:19.577 [Converge PipelineAction::Create<main>] Reflections - Reflections took 117 ms to scan 1 urls, producing 19 keys and 39 values

[INFO ] 2019-08-22 00:50:22.708 [[main]-pipeline-manager] elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://10.1.24.172:9200/]}}

[WARN ] 2019-08-22 00:50:23.592 [[main]-pipeline-manager] elasticsearch - Restored connection to ES instance {:url=>"http://10.1.24.172:9200/"}

[INFO ] 2019-08-22 00:50:24.066 [[main]-pipeline-manager] elasticsearch - ES Output version determined {:es_version=>7}

[WARN ] 2019-08-22 00:50:24.070 [[main]-pipeline-manager] elasticsearch - Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[INFO ] 2019-08-22 00:50:24.185 [[main]-pipeline-manager] elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//10.1.24.172:9200"]}

[WARN ] 2019-08-22 00:50:24.489 [[main]-pipeline-manager] LazyDelegatingGauge - A gauge metric of an unknown type (org.jruby.RubyArray) has been create for key: cluster_uuids. This may result in invalid serialization. It is recommended to log an issue to the responsible developer/development team.

[INFO ] 2019-08-22 00:50:24.517 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>125, :thread=>"#<Thread:0x47d956ea run>"}

[INFO ] 2019-08-22 00:50:24.824 [Ruby-0-Thread-5: :1] elasticsearch - Using default mapping template

[INFO ] 2019-08-22 00:50:24.950 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}

The stdin plugin is now waiting for input:

[INFO ] 2019-08-22 00:50:25.062 [Ruby-0-Thread-5: :1] elasticsearch - Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}

[INFO ] 2019-08-22 00:50:25.136 [Ruby-0-Thread-5: :1] elasticsearch - Installing elasticsearch template to _template/logstash

[INFO ] 2019-08-22 00:50:25.203 [Ruby-0-Thread-1: /usr/share/logstash/lib/bootstrap/environment.rb:6] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[INFO ] 2019-08-22 00:50:26.392 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

hello world

my name is fencatn

tis^H^H^H

this is a test

^C[WARN ] 2019-08-22 00:52:10.893 [SIGINT handler] runner - SIGINT received. Shutting down.

[INFO ] 2019-08-22 00:52:11.335 [Converge PipelineAction::Stop<main>] javapipeline - Pipeline terminated {"pipeline.id"=>"main"}

[INFO ] 2019-08-22 00:52:11.520 [LogStash::Runner] runner - Logstash shut down.

[root@n7 ~]#

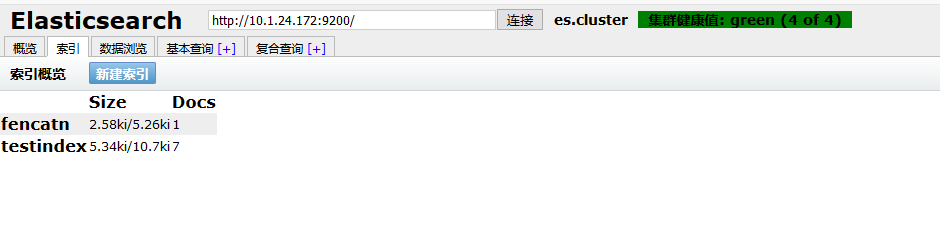

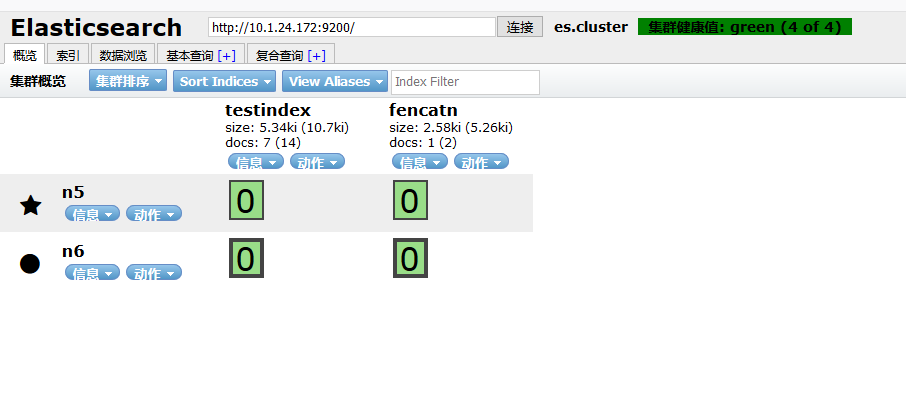

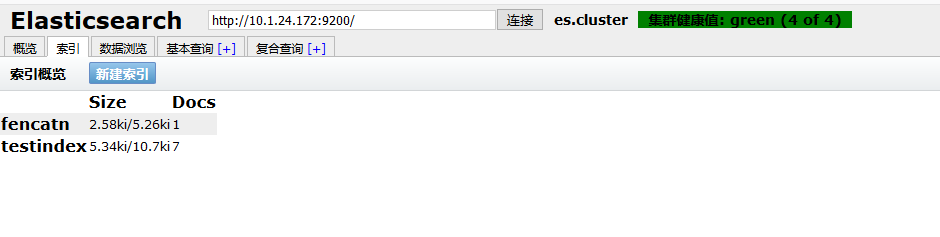

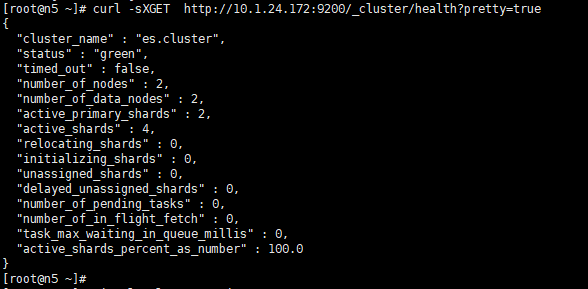

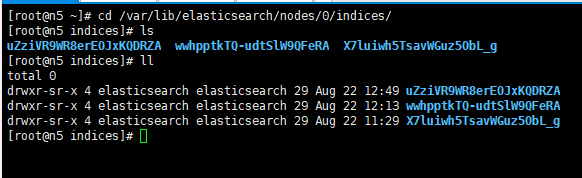

Check on the Elasticsearch host; the test succeeded.

[root@n5 ~]#

[root@n5 ~]# cd /var/lib/elasticsearch/nodes/0/indices/

[root@n5 indices]# ls

uZziVR9WR8erEOJxKQDRZA wwhpptkTQ-udtSlW9QFeRA X7luiwh5TsavWGuz5ObL_g

[root@n5 indices]# ll

total 0

drwxr-sr-x 4 elasticsearch elasticsearch 29 Aug 22 12:49 uZziVR9WR8erEOJxKQDRZA

drwxr-sr-x 4 elasticsearch elasticsearch 29 Aug 22 12:13 wwhpptkTQ-udtSlW9QFeRA

drwxr-sr-x 4 elasticsearch elasticsearch 29 Aug 22 11:29 X7luiwh5TsavWGuz5ObL_g

[root@n5 indices]#
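The directories under `indices/` are named by index UUID, so the listing does not show which one belongs to `mytest-*`. Elasticsearch's `_cat/indices` and `_search` REST endpoints report indices by name instead. The sketch below builds today's index name the way the `mytest-%{+YYYY.MM.dd}` pattern would, assuming the date is taken from `@timestamp` in UTC, and shows the matching `curl` calls as comments because they need network access to the Elasticsearch host. The helper name `todays_index` is made up for illustration.

```shell
# Build the index name for today, matching index => "mytest-%{+YYYY.MM.dd}".
# Assumption: the date part follows @timestamp, which is in UTC, hence `date -u`.
todays_index() {
  date -u +"mytest-%Y.%m.%d"
}

es_host="10.1.24.172:9200"   # Elasticsearch address used in this post
echo "index to look for: $(todays_index)"

# From a host that can reach Elasticsearch:
#   list matching indices with document counts
#     curl -s "http://$es_host/_cat/indices/mytest-*?v"
#   show the documents that were typed on stdin
#     curl -s "http://$es_host/$(todays_index)/_search?pretty"
```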

Check in the browser as well; the test succeeded.